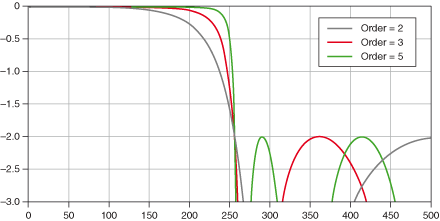

> jrmoserbaltimore wrote: Mon Jan 01, 2024 3:06 pm
> Considering the shape of the Chebyshev 2…wait, this can't be right?
>
> ...okay I'm just going to assume that graphic's wrong, multiplying the order 2 output by the order 3 output does not give the order 5 output shown, that can't be right, unless an order 2 outputting into an order 3 doesn't create an order 5 filter.

The graph is fine. A Chebyshev type 2 design is parameterized by the cutoff (here at ~260 or so?), the stop-band ripple (here at -2.0; this looks like a logarithmic scale, but no idea what the base is) and the order. What we see here is that as the order increases, the transition gets steeper but the ripple stays the same. This is what we expect, since the ripple is a design parameter.

For "optimal designs" (Bessel, Butterworth, Cheb 1/2, Elliptic...) you generally cannot design higher order filters by stacking lower order designs, because the result would no longer be optimal in the desired sense. An order 2 feeding an order 3 is still a 5th order filter, it's just not the order 5 Chebyshev. For example, in the case of Butterworth the poles are placed evenly on a half-circle on the Laplace s-plane in continuous time. The discrete-time placements after BLT are still on a circle, 'cos BLT is a Möbius transform that maps circles and lines to circles and lines, but it's not quite a half-circle. You usually do these designs in continuous time anyway and then just use BLT to convert (see below for a remark about that). The formulas for Chebyshev can be found in https://en.wikipedia.org/wiki/Chebyshev_filter for example (they look much worse than they actually are).
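To make the "stacking is not the same thing" point concrete, here is a minimal sketch that compares the magnitude of an order 2 cascaded with an order 3 against a true order 5. It assumes the textbook analog Chebyshev II magnitude formula (the one on the Wikipedia page linked above), a normalized stop-band edge `w0 = 1` and a 40 dB stop-band; those numbers and the function names are my own picks for illustration, not values read off the graph.

```python
import math

def cheb_poly(n, x):
    """Chebyshev polynomial T_n(x) via the three-term recurrence."""
    t_prev, t = 1.0, x
    if n == 0:
        return t_prev
    for _ in range(n - 1):
        t_prev, t = t, 2.0 * x * t - t_prev
    return t

def cheby2_gain(n, w, w0=1.0, stop_db=40.0):
    """Magnitude of an order-n analog Chebyshev II low-pass at frequency w > 0.

    w0 is the stop-band edge and stop_db the stop-band attenuation in dB
    (both are illustrative assumptions).
    """
    eps = 1.0 / math.sqrt(10.0 ** (stop_db / 10.0) - 1.0)
    a = abs(eps * cheb_poly(n, w0 / w))
    # Algebraically the same as 1/sqrt(1 + 1/(eps*T_n)^2), but safe when T_n = 0.
    return a / math.sqrt(1.0 + a * a)

def db(g):
    return 20.0 * math.log10(g)

for w in (0.5, 0.8, 1.0, 2.0):
    cascade = cheby2_gain(2, w) * cheby2_gain(3, w)
    single = cheby2_gain(5, w)
    print(f"w={w}: order 2 x order 3 = {db(cascade):7.1f} dB, "
          f"order 5 = {db(single):7.1f} dB")
```

At the stop-band edge the order 5 sits at exactly -40 dB by construction, while the cascade is at -80 dB there, so the two curves are clearly different filters even though both are 5th order.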

> Is an order 5 Chebyshev stable? An order 5 filtering the output of an Order 3 looks interesting, along with potentially moving the cutoff point between the two filters, assuming that graphic is actually an accurate representation.

There are two different notions of stability you need to worry about. The first one is easy: a causal IIR filter is stable if all the poles (roots of the transfer function denominator) are inside the z-plane unit circle (i.e. |z|<1), or in the continuous-time case in the left half-plane of the Laplace s-plane (Re s < 0). BLT maps the left half-plane of the s-plane to the z-plane unit disc, so when using BLT stability is preserved exactly, provided the pre-warping frequency is below Nyquist. All the classic filter designs are stable in this sense for arbitrary orders.
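A quick way to convince yourself of the pole criterion is to push some analog poles through the BLT and look at |z|. This is only a sketch: it uses the standard Butterworth pole formula as the example design, and the sample rate, cutoff and helper names (`butter_poles`, `blt`, `is_stable`) are made up for illustration.

```python
import cmath
import math

def butter_poles(order, wc):
    """Analog Butterworth poles, evenly spaced on the left half of a circle of radius wc."""
    return [wc * cmath.exp(1j * math.pi * (2 * i + order - 1) / (2 * order))
            for i in range(1, order + 1)]

def blt(s, fs):
    """Bilinear transform of a single s-plane point: z = (2*fs + s) / (2*fs - s)."""
    k = 2.0 * fs
    return (k + s) / (k - s)

def is_stable(z_poles):
    """Causal IIR stability: every pole strictly inside the unit circle."""
    return all(abs(z) < 1.0 for z in z_poles)

fs = 48000.0                                   # assumed sample rate
fc = 1000.0                                    # assumed cutoff
wc = 2.0 * fs * math.tan(math.pi * fc / fs)    # pre-warped analog cutoff

z_poles = [blt(p, fs) for p in butter_poles(5, wc)]
for z in z_poles:
    print(f"|z| = {abs(z):.6f}")
print("stable:", is_stable(z_poles))
```

Any point with Re s < 0 is closer to -2*fs than to +2*fs, which is exactly why the ratio above has magnitude below 1.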

The other issue is numerical stability, which depends on your chosen implementation topology and numerical precision. Basically, once we throw in rounding errors during processing, the poles might not be quite where they are supposed to be anymore. What happens to them, and whether rounding errors can make a stable filter unstable, depends on the filter topology (with some topologies it's possible to arrange for any rounding errors to always result in more damping rather than less). Usually the first IIR filter topologies you encounter are the direct form topologies, but these are not exactly great in terms of numerical behaviour (read: they are terrible), and for direct form implementation you almost always want to decompose your filter into series (or parallel) 2nd order sections (+ a left-over 1st order section for odd orders).

Decomposing a filter is not much of a problem with a design such as Butterworth or Cheb 2 that you'd generally build by pole placement anyway: you just group the complex conjugate pairs together and those are your 2nd order sections. Some care must be taken when doing BLT on individual 2nd order sections, because all sections must use the same frequency pre-warping constant, and for designs other than Butterworth this might not be the "pole frequency" of the individual biquads.
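Here's roughly what that looks like for a Butterworth low-pass: pair up the conjugate poles, BLT each analog section separately, and use one shared pre-warped cutoff for all of them. Treat it as a sketch under assumptions: the function names and the `(b0, b1, b2, a1, a2)` tuple layout are my own, and Butterworth is the easy case where the shared pre-warp frequency happens to equal every section's pole frequency.

```python
import cmath
import math

def butter_sos(order, fc, fs):
    """Butterworth low-pass as cascaded sections (b0, b1, b2, a1, a2), with a0 = 1."""
    k = 2.0 * fs                           # BLT: s = k * (1 - z^-1) / (1 + z^-1)
    wc = k * math.tan(math.pi * fc / fs)   # one pre-warped cutoff shared by all sections
    sections = []
    # Upper-half-plane poles only; each stands for a complex conjugate pair.
    for i in range(1, order // 2 + 1):
        p = wc * cmath.exp(1j * math.pi * (2 * i + order - 1) / (2 * order))
        c1 = -2.0 * p.real                 # analog section: c0 / (s^2 + c1*s + c0)
        c0 = abs(p) ** 2
        a0 = k * k + c1 * k + c0
        b = c0 / a0
        sections.append((b, 2.0 * b, b,
                         2.0 * (c0 - k * k) / a0,
                         (k * k - c1 * k + c0) / a0))
    if order % 2:
        # Left-over real pole at s = -wc: wc / (s + wc)
        a0 = k + wc
        b = wc / a0
        sections.append((b, b, 0.0, (wc - k) / a0, 0.0))
    return sections

def response(sections, f, fs):
    """Complex frequency response of the cascade at frequency f."""
    zi = cmath.exp(-2j * math.pi * f / fs)  # z^-1 on the unit circle
    h = 1.0
    for b0, b1, b2, a1, a2 in sections:
        h *= (b0 + b1 * zi + b2 * zi * zi) / (1.0 + a1 * zi + a2 * zi * zi)
    return h

sos = butter_sos(5, 1000.0, 48000.0)
print(len(sos), "sections, gain at cutoff:", abs(response(sos, 1000.0, 48000.0)))
```

Because of the pre-warping, the gain at `fc` lands on the Butterworth -3 dB point (1/sqrt(2)). For Cheb 2 you would swap in that design's poles and zeros but keep the single shared `k` and pre-warp frequency.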

Other topologies can be more stable though, which is why I mentioned lattice-ladders above, 'cos those can usually tolerate higher orders much better... but then you need a bit more math to convert to reflection coefficients which potentially loses precision if you need to multiply the poles together first... so like.. idk.. cascade of 2nd order sections is usually the easy way to build stable high order filters.
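For reference, the "bit more math" for getting reflection coefficients out of a direct-form denominator is the step-down (backward Levinson) recursion. A minimal sketch, assuming the denominator is given as `[1, a1, ..., aN]` for 1 + a1 z^-1 + ... + aN z^-N; the function name is made up, and sign conventions for the k's differ between references, so check against whatever lattice structure you actually implement.

```python
def reflection_coeffs(a):
    """Step-down recursion: denominator [1, a1, ..., aN] -> [k1, ..., kN].

    The filter is stable exactly when every |k| < 1, so this raises
    ValueError as soon as that fails.
    """
    a = [c / a[0] for c in a]              # normalise so that a[0] == 1
    ks = []
    for m in range(len(a) - 1, 0, -1):
        k = a[m]                           # highest coefficient is k_m
        if abs(k) >= 1.0:
            raise ValueError("not stable: |k| >= 1 at order %d" % m)
        ks.append(k)
        # Peel off one lattice stage to get the order m-1 polynomial.
        a = [(a[i] - k * a[m - i]) / (1.0 - k * k) for i in range(m)]
    return ks[::-1]

# Biquad with poles at 0.5 +/- 0.5j (inside the unit circle).
print(reflection_coeffs([1.0, -1.0, 0.5]))
```

Note that this starts from the expanded polynomial, which is exactly the step where high-order designs can lose precision, as mentioned above.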